Andy Cheshire looks at the Quality of Apprenticeship Provision

During 2018, OFSTED (@ofstednews) have been making judgements of ‘Insufficient’, ‘Reasonable’ or ‘Significant’ on new providers on the Register of Apprenticeship Training Providers (RoATP) against three key areas: Leadership, Quality and Safeguarding. The aim is to ensure new providers are up to scratch. The data so far, from 59 providers, allows us to do some headline analysis to help inform future policy in this area.

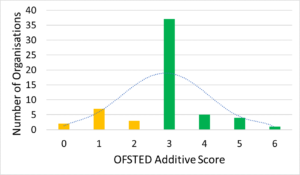

To allow any sort of meaningful analysis, the data needs to be turned into a score. I have assumed a value of 0 for Insufficient, 1 for Reasonable and 2 for Significant in each of the three areas, assumed all three areas are equally important, and added the scores, giving each provider a total between 0 and 6. A first analysis we might do is to plot the number of training providers achieving each score:

Scores shown in green are those where every provider was judged ‘Reasonable’ or better in all three areas. So the visits so far have found the majority (63%) to be Reasonable against all three areas, with a few beating the norm or falling short of the required standard. The blue line, incidentally, is a plot of what a true normal distribution would show for the same data set, from which we can see that the scores of 2 and 4 are under-represented.
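The scoring scheme described above can be sketched in a few lines of Python. This is only an illustration: the function names and the example judgements are hypothetical and are not the published data.

```python
from collections import Counter

# Points per judgement, as assumed in the scoring scheme described in the text.
JUDGEMENT_POINTS = {"Insufficient": 0, "Reasonable": 1, "Significant": 2}


def total_score(leadership, quality, safeguarding):
    """Add the points for the three equally weighted areas (range 0 to 6)."""
    return sum(JUDGEMENT_POINTS[j] for j in (leadership, quality, safeguarding))


def all_reasonable_or_better(leadership, quality, safeguarding):
    """True when no area was judged Insufficient."""
    return all(JUDGEMENT_POINTS[j] >= 1 for j in (leadership, quality, safeguarding))


# Hypothetical judgements, for illustration only (not the real visit data).
example_visits = [
    ("Reasonable", "Reasonable", "Reasonable"),
    ("Significant", "Reasonable", "Reasonable"),
    ("Insufficient", "Reasonable", "Reasonable"),
    ("Insufficient", "Insufficient", "Reasonable"),
]

# Number of providers achieving each total score, ready to plot as a bar chart.
score_counts = Counter(total_score(*visit) for visit in example_visits)
for score in sorted(score_counts):
    print(score, score_counts[score])
```

Note that the total alone does not say whether every area was at least Reasonable: Insufficient, Reasonable and Significant also add up to 3. That is why the sketch keeps a separate check for it.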

From a Six Sigma viewpoint, if we treat the customers as the Employers who are receiving an apprenticeship service, then they should expect no scores of 0 in any of the three areas, with the process that produced this output being the RoATP tendering process. Counting each of these zero scores as a ‘defect’ gives a Defects per Opportunity (DPO) level of 13%, or a 2.6 Sigma level, which is not quite as good as airport baggage handling, at 3.2 Sigma! (Incidentally, airline safety, measured by deaths due to crashes, where quality needs to be greater, operates at greater than 6 Sigma.) Perhaps this outcome is not a surprise, as the tendering process was a desk-based exercise and a failure rate of 20% of new providers was probably to be expected, but it may inform thinking for future tendering. A 20% rate would suggest that some 240 of the new providers will either fall by the wayside or need to make improvements before achieving a Reasonable judgement across all three areas, and of course will be subject to a full OFSTED inspection within 6-12 months of the initial report.
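For readers who want to check the conversion from a defect rate to a Sigma level, here is a minimal sketch. It assumes the common Six Sigma convention of adding a 1.5 sigma shift to the normal quantile of the yield; the article does not state which convention was used, but this one reproduces the quoted figure. The function name is hypothetical.

```python
from statistics import NormalDist


def sigma_level(dpo, shift=1.5):
    """Convert Defects per Opportunity to a Sigma level.

    Assumption: the conventional 1.5 sigma long-term shift is applied.
    """
    process_yield = 1.0 - dpo
    return NormalDist().inv_cdf(process_yield) + shift


print(round(sigma_level(0.13), 1))  # 2.6
```

Passing `shift=0` gives the unshifted figure, which is noticeably lower, so it is worth knowing which convention a quoted Sigma level uses before comparing it with other processes.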

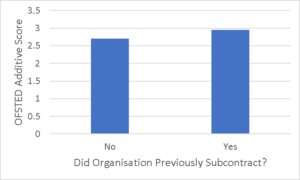

So is experience of delivering apprenticeships as a subcontractor a positive?

The summary data (below) shows a higher score for organisations with previous experience compared with those without. This is to be expected, as it generally takes a number of years of organisational learning to get good at the many facets of apprenticeship delivery.

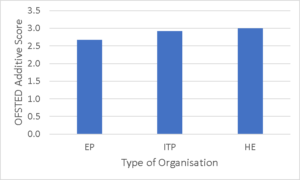

The next analysis we can look at is by type of organisation: HE (Higher Education), ITP (Independent Training Provider) and EP (Employer Provider):

Here we see Higher Education institutes with the highest score, Independent Training Providers next highest and Employer Providers with the lowest score. Note that only two HE providers were inspected, so the sample is not yet representative, but we would perhaps expect them to have experience of all three elements, even if not specifically in apprenticeships. Of the nine employer-providers inspected, we would certainly expect many to be on an FE learning curve, so it is not surprising that their score is lower; this was always part of the risk of widening the pool of providers.

Other analyses show:

There was no significant change in average score over time. This could mean both that the providers sampled so far have genuinely been chosen at random and that OFSTED are keeping their judgements consistent over time.

There was also no significant difference in score by number of apprentices, from which we can conclude that both good and poor practice are present at all scales of delivery.

Many thanks to @feweek for publishing the summary data used in this analysis in their article on Early Monitoring Visits.